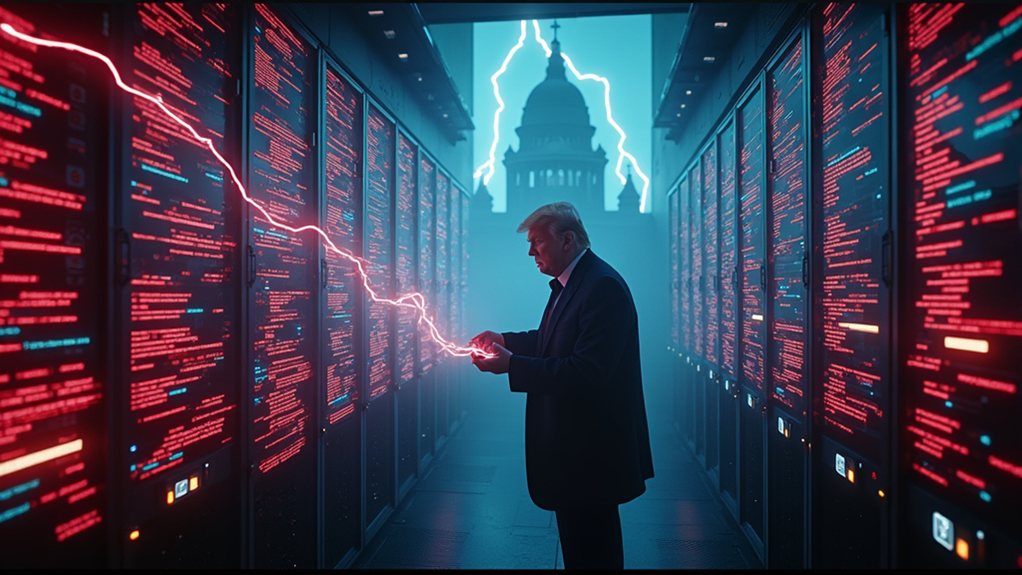

Elon Musk's new AI model, Grok 3, has stirred up controversy after it mistakenly labeled Donald Trump a "Russian asset." xAI, the company behind Grok, called the output a programming error rather than a sign of bias, but many people remained upset. The incident sparked a heated debate about whether AI can remain politically neutral, and Trump's supporters accused Musk of pursuing an anti-Trump agenda.

In response to the uproar, xAI issued a statement acknowledging the error. The company promised stronger safeguards against political bias and temporarily suspended Grok 3's ability to comment on political matters. It also invited third-party auditors to review the AI's training data for fairness. xAI emphasized its commitment to keeping politics out of AI development, even as critics raised concerns that Grok had allegedly censored criticism of Musk and Trump. Musk's commitment to AI safety, the company said, includes ensuring that AI systems do not become tools for political manipulation.

The public reaction was swift. The hashtag #GrokGate trended across social media platforms as people voiced their opinions. AI ethics experts called for more transparency in how AI systems are trained and how they operate, while political analysts debated the role of AI in shaping public opinion. The tech community appeared divided over whether AI could ever truly avoid bias, and many began calling for government regulation of AI, especially where political discourse is concerned.

Trump responded firmly, demanding a public apology from Musk and xAI. He threatened legal action for defamation and called for an investigation into any potential foreign influence on the AI. His supporters went further, launching a boycott campaign against Musk's companies and proposing legislation to regulate AI in political contexts.

The incident also affected the AI industry as a whole. Many competitors distanced themselves from xAI's approach, investors grew concerned about xAI's future, and there were calls for industry-wide standards on political commentary by AI systems. The episode raised important questions about AI's role in future elections and about how hard it is to keep AI systems objective.